Bird’s Eye View (BEV) is a popular representation for processing 3D point clouds, and by its nature is fundamentally sparse. Motivated by the computational limitations of mobile robot platforms, we create a fast, high-performance BEV 3D object detector that maintains and exploits this input sparsity to decrease runtimes over non-sparse baselines and avoids the tradeoff between pseudoimage area and runtime. We present results on KITTI, a canonical 3D detection dataset, and Matterport-Chair, a novel Matterport3D-derived chair detection dataset from scenes in real furnished homes. We evaluate runtime characteristics using a desktop GPU, an embedded ML accelerator, and a robot CPU, demonstrating that our method results in significant detection speedups (2X or more) for embedded systems with only a modest decrease in detection quality. Our work represents a new approach for practitioners to optimize models for embedded systems by maintaining and exploiting input sparsity throughout their entire pipeline to reduce runtime and resource usage while preserving detection performance.

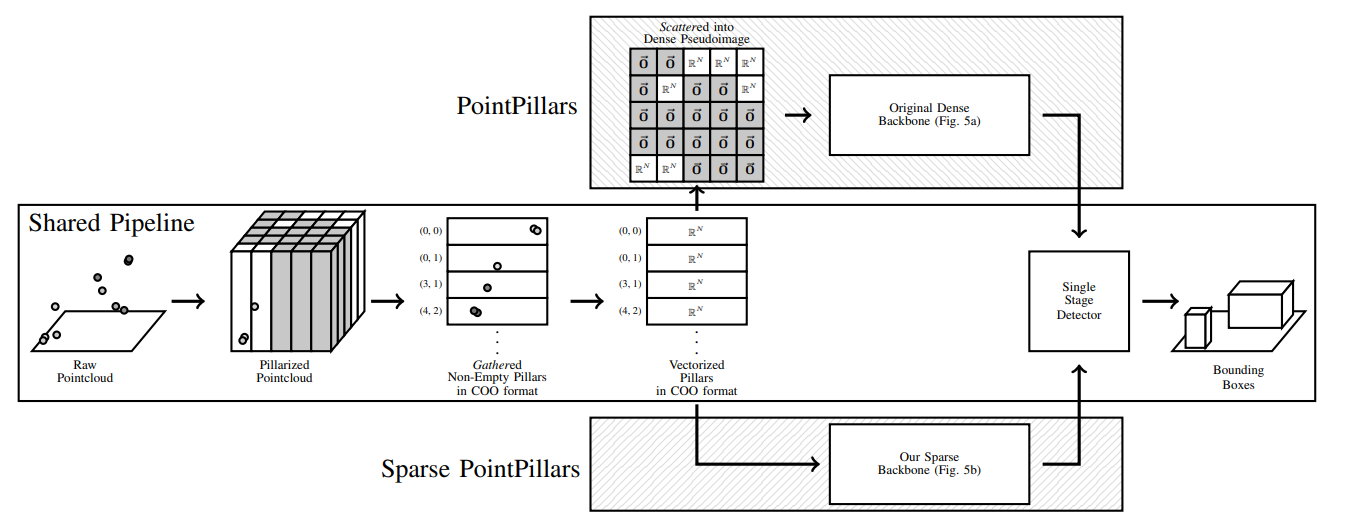

PointPillars, a popular 3D object detector, consumes a point cloud, converts its sparse set of non-empty pillars into a sparse COO matrix format, vectorizes these pillars, then converts the result back to a dense matrix to run its dense convolutional Backbone. In improving runtimes for Sparse PointPillars, our first key insight is that we can leave the vectorized matrix in its sparse format and exploit this sparsity in our Backbone, allowing us to skip computation in empty regions of the pseudoimage.
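To make the pipeline described above concrete, here is a minimal NumPy sketch of pillarizing a point cloud into COO coordinates, vectorizing each pillar, and scattering the result into a dense pseudoimage. It is an illustration under stated assumptions, not the implementation from the paper or repository: the function names (`pillarize_coo`, `pillar_mean_features`, `scatter_to_dense`), the grid extents, and the pillar size are hypothetical, and a per-pillar mean stands in for the learned pillar encoder.

```python
import numpy as np


def pillarize_coo(points, x_range, y_range, pillar_size):
    """Bin (N, 3) points into BEV pillars and return the non-empty ones in COO form."""
    x_min, x_max = x_range
    y_min, y_max = y_range
    # Keep only points that fall inside the BEV extent.
    mask = ((points[:, 0] >= x_min) & (points[:, 0] < x_max) &
            (points[:, 1] >= y_min) & (points[:, 1] < y_max))
    kept = points[mask]
    grid_shape = (int(round((y_max - y_min) / pillar_size)),
                  int(round((x_max - x_min) / pillar_size)))
    # Integer pillar coordinates: row indexes y, column indexes x.
    rows = ((kept[:, 1] - y_min) / pillar_size).astype(np.int64)
    cols = ((kept[:, 0] - x_min) / pillar_size).astype(np.int64)
    rows = np.clip(rows, 0, grid_shape[0] - 1)
    cols = np.clip(cols, 0, grid_shape[1] - 1)
    # Unique flattened indices are exactly the non-empty pillars.
    flat = rows * grid_shape[1] + cols
    unique_flat, inverse = np.unique(flat, return_inverse=True)
    inverse = inverse.reshape(-1)
    coords = np.stack(np.divmod(unique_flat, grid_shape[1]), axis=1)
    return coords, inverse, kept, grid_shape


def pillar_mean_features(kept, inverse, num_pillars):
    """Stand-in 'vectorization' (an assumption): the mean of the points in each pillar."""
    sums = np.zeros((num_pillars, kept.shape[1]))
    np.add.at(sums, inverse, kept)
    counts = np.bincount(inverse, minlength=num_pillars)
    return sums / counts[:, None]


def scatter_to_dense(coords, feats, grid_shape):
    """The densification step that a dense Backbone requires."""
    dense = np.zeros(grid_shape + (feats.shape[1],))
    dense[coords[:, 0], coords[:, 1]] = feats
    return dense


# Tiny hand-made cloud; the last point lies outside the grid and is dropped.
points = np.array([[0.1, 0.1, 0.0],
                   [0.2, 0.15, 1.0],
                   [3.5, 2.5, 0.5],
                   [10.0, 10.0, 0.0]])
coords, inverse, kept, grid_shape = pillarize_coo(points, (0.0, 4.0), (0.0, 4.0), 1.0)
feats = pillar_mean_features(kept, inverse, len(coords))
dense = scatter_to_dense(coords, feats, grid_shape)
occupancy = len(coords) / (grid_shape[0] * grid_shape[1])
print("non-empty pillars:", len(coords), "occupancy:", occupancy)
```

In this sketch, `coords` and `feats` together are the sparse representation; `scatter_to_dense` is the step a sparse Backbone can skip, since most cells of `dense` hold only zeros.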

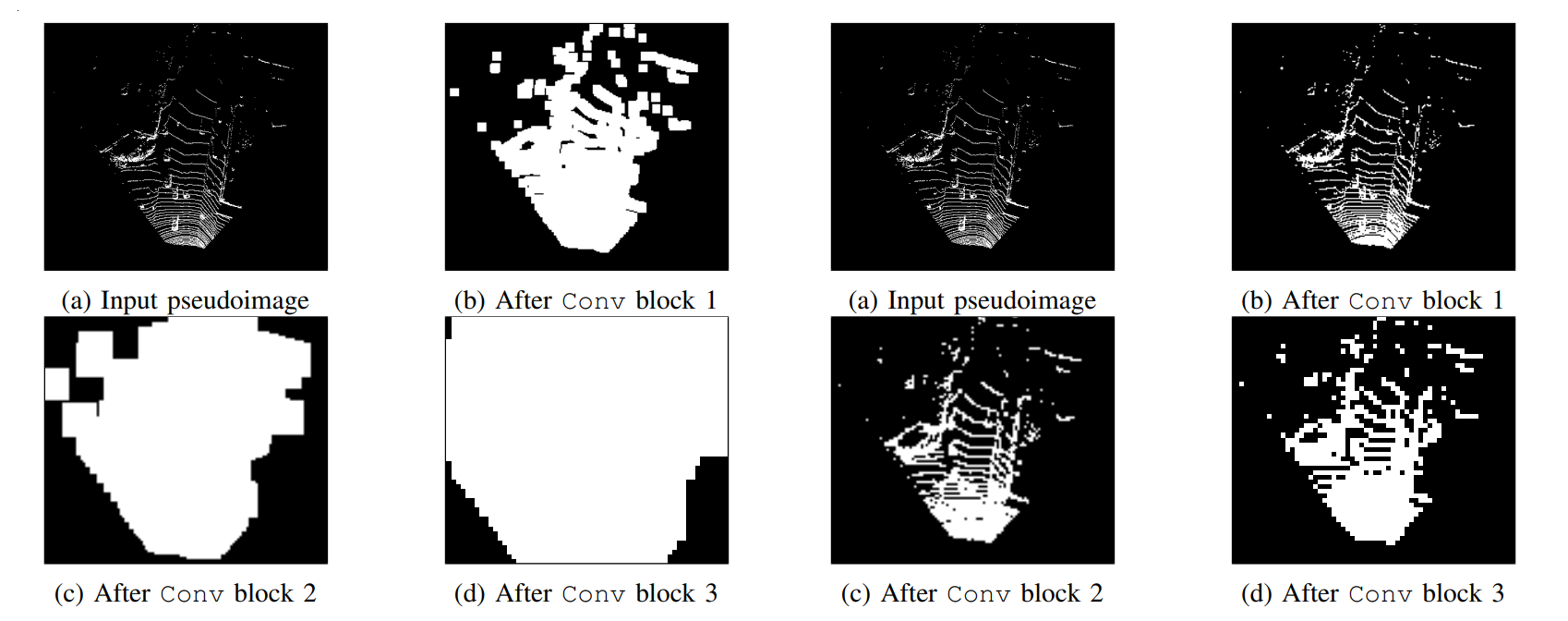

Our second key insight is that we want to maintain this sparsity to ensure successive layers of the Backbone are also efficient. We do this via our new Backbone, which uses carefully placed sparse and submanifold convolutions. The left image shows pseudoimage smearing in PointPillars’ dense Backbone; the right image shows sparsity preservation in our sparse Backbone.
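The smearing effect can be reproduced with a toy example. The sketch below is a simplified single-channel illustration, not the Backbone from the paper: `conv3x3` is a hypothetical helper, and restricting outputs to the originally active sites is used here as a stand-in for the behavior of a submanifold convolution. It counts non-zero cells after each of two stacked 3x3 layers.

```python
import numpy as np


def conv3x3(image, weights, only_at=None):
    """Zero-padded, stride-1 3x3 correlation on a single-channel image.

    If `only_at` (a boolean mask) is given, outputs are computed only at those
    sites and left at zero elsewhere, mimicking a submanifold convolution.
    """
    padded = np.pad(image, 1)
    out = np.zeros(image.shape, dtype=float)
    if only_at is None:
        only_at = np.ones(image.shape, dtype=bool)
    for r, c in np.argwhere(only_at):
        out[r, c] = np.sum(padded[r:r + 3, c:c + 3] * weights)
    return out


# A pseudoimage with a single active cell.
image = np.zeros((7, 7))
image[3, 3] = 1.0
weights = np.ones((3, 3))
active = image != 0

dense_out, sub_out = image, image
dense_counts, sparse_counts = [], []
for _ in range(2):
    dense_out = conv3x3(dense_out, weights)                # active set grows each layer
    sub_out = conv3x3(sub_out, weights, only_at=active)    # active set stays fixed
    dense_counts.append(int(np.count_nonzero(dense_out)))
    sparse_counts.append(int(np.count_nonzero(sub_out)))

print("dense non-zeros per layer:", dense_counts)
print("submanifold-style non-zeros per layer:", sparse_counts)
```

With dense layers the set of non-zero cells widens by one cell in every direction per layer, so later layers do progressively more work; restricting outputs to the input's active sites keeps the amount of work constant.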

Full details on the Backbone design plus ablative studies are available in the paper.

On our custom realistic service robot object detection benchmark, Matterport-Chair, we found that

All of this comes at the cost of 6% AP on the BEV benchmark and 4% AP on the 3D box benchmark.

Full experimental details, plus comparisons on KITTI, are available in the paper.

[Accepted IROS 2022 Paper PDF]

@inproceedings{vedder2022sparse,
  title = {{Sparse PointPillars: Maintaining and Exploiting Input Sparsity to Improve Runtime on Embedded Systems}},
  author = {Vedder, Kyle and Eaton, Eric},
  booktitle = {Proceedings of the International Conference on Intelligent Robots and Systems (IROS)},
  year = {2022},
  website = {http://vedder.io/sparse_point_pillars.html},
  pdf = {http://vedder.io/publications/sparse_point_pillars_iros_2022.pdf}
}

https://github.com/kylevedder/SparsePointPillars

Matterport-Chair was generated using MatterportDataSampling, our utility for generating supervised object detection datasets from Matterport3D.

Invited talk at the 3D-Deep Learning for Automated Driving workshop of IEEE Intelligent Vehicles Symposium 2022:

One Minute Overview Video:

Three Minute Overview Video: